On Data Science and a Quest for Justice

As long as we apply data science to society, we should remember that our data may have flaws, biases, and absences. That is one motif of MIT Associate Professor Catherine D’Ignazio’s new book, “Counting Feminicide,” published this spring by the MIT Press. In it, D’Ignazio explores the world of Latin American activists who began using media accounts and other sources to tabulate how many women had been killed in their countries as the result of gender-based violence — and found that their own numbers differed greatly from official statistics.

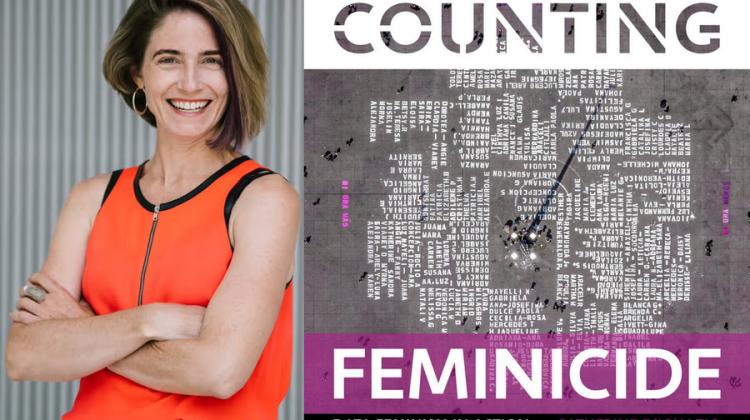

Some of these activists have become prominent public figures, and others less so, but all of them have produced work providing lessons about collecting data, sharing it, and applying data to projects supporting human liberty and dignity. Now, their stories are reaching a new audience thanks to D’Ignazio, an associate professor of urban science and planning in MIT’s Department of Urban Studies and Planning, and director of MIT’s Data and Feminism Lab. She is also hosting an ongoing, transnational book club about the work. MIT News spoke with D’Ignazio about the new book and how activists are expanding the traditional practice of data science.

Q: What is your book about?

A: Three things. It’s a book that documents the rise of data activism as a really interesting form of citizen data science. Because of the availability of data and tools, gathering data and doing your own analysis is a growing form of social activism. We characterize it in the book as a citizenship practice. People are using data to make knowledge claims and put political demands out there for their institutions to respond to.

Another takeaway, from observing data activists, is that they approach data science in ways that are very different from how it’s usually taught. Among other things, when the work is about inequality and violence, there’s a connection with the rows of data. It’s about memorializing people who have been lost. Mainstream data scientists can learn a lot from this.

The third thing is about feminicide itself and missing information. The main reason people start collecting data about feminicide is that their institutions aren’t doing it. This includes our institutions here in the United States. We’re talking about violence against women that the state is neglecting to count, classify, or take action on. So, activists step into these gaps and do this to the best of their ability, and they have been quite effective. The media will go to the activists, who end up becoming authorities on feminicide.

Q: Can you elaborate on the differences between the practices of these data activists and more standard data science?

A: One difference is what I’ll call the intimacy and proximity to the rows of the dataset. In conventional data science, when you’re analyzing data, typically you’re not also the data collector. However, these activists and groups are involved across the entire pipeline. As a result, there’s a connection to, and a humanization of, each line of the dataset. For example, there is a school nurse in Texas who runs the site Women Count USA, and she will spend many hours trying to find photographs of victims of feminicide, which represents unusual care paid to each row of a dataset.

Another point is the sophistication that the data activists have around what their data represents and what the biases are in the data. In mainstream AI and data science, we’re still having conversations where people seem surprised that there is bias in datasets. But I was impressed with the critical sophistication with which the activists approached their data. They gather information from the media, are familiar with the biases media have, and are aware that their data is not comprehensive but is still useful. We can hold those two things together. It’s often more comprehensive data than what the institutions themselves have or will release to the public.

Q: You did not just chronicle the work of activists, but engaged with them as well, and report on that in the book. What did you work on with them?

A: One big component in the book is the participatory technology development that we engaged in with the activists, and one chapter is a case study of our work with activists to co-design machine learning and AI technology that supports their work. Our team was brainstorming about a system for the activists that would automatically find cases, verify them, and put them right in the database. Interestingly, the activists pushed back on that. They did not want full automation. They felt that being, in effect, witnesses is an important part of the work. The emotional burden is an important part of the work and very central to it, too. That’s not something I might always expect to hear from data scientists.

Keeping the human in the loop also means the human makes the final decision over whether a specific item constitutes feminicide or not. Handling it like that aligns with the fact that there are multiple definitions of feminicide, which is a complicated thing from a computational perspective. The proliferation of definitions about what counts as feminicide is a reflection of the fact that this is an ongoing global, transnational conversation. Feminicide has been codified in many laws, especially in Latin American countries, but no single one of those laws is definitive. And no single activist definition is definitive. People are creating this together, through dialogue and struggle, so any computational system has to be designed with that understanding of the democratic process in mind.